Can I completely rely on a machine that autonomously inserts its instruments into the head and neck region to remove a cancerous tumour?

Or should I prefer an experienced (human) surgeon to do the same job?

Reference: https://in.pinterest.com/pin/492651646717734261/

Figure 01: We intend to build systems that support humans, not replace them completely.

I would prefer the machine if it acted like an intelligent human surgeon, but a key weakness of current machine learning models is that they cannot generalise beyond their training distribution, which is exactly where humans outperform machines. In other words, machines cannot handle situations beyond those they have been trained for. Humans use concepts such as tumour size, spatial reasoning and continuity to explain what has happened, infer what is about to happen, and imagine what would happen in counterfactual situations.

The most fundamental aspects of intelligent human behaviour are the ability to learn from experience and to reason from what has been learned. Can we build systems that discover the tumour regions of any cancer at least as well as an intelligent surgeon can? This is a path towards broad AI: machines that can multi-task while remaining sufficiently explainable.

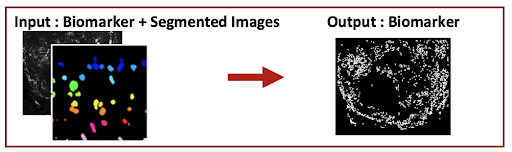

Generating radiologist-level diagnostic reports from medical images for clinical decision-making is extremely challenging, because the task involves both visual perception and high-level reasoning. On the visual-perception side, recent advances in deep learning (DL) help select and extract features, construct new ones for diagnosing a disease, and predict the target of interest, which helps physicians decide on the next steps efficiently. However, despite their impressive results, DL algorithms still lack interpretable justifications for their decisions: they do not expose the rationale behind their conclusions, and their internal representations are intrinsically hard to explain. For example, in histopathology images, DL algorithms segment nuclei successfully and automate the laborious manual process of counting and segmentation, but they cannot explain why they produced a particular segmentation.

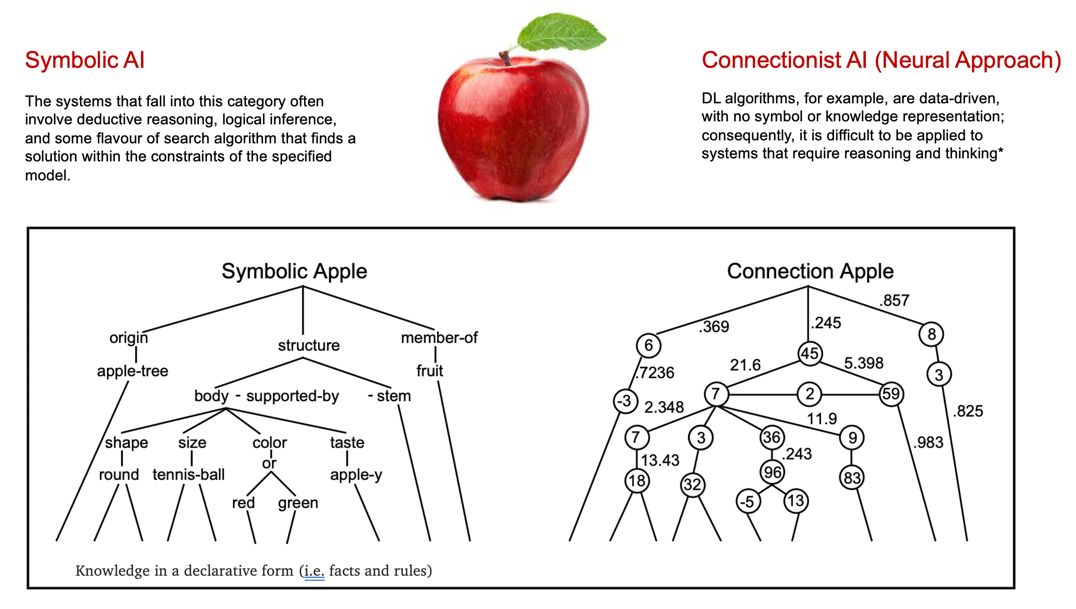

Most recent efforts try to build methods that analyse the contributions of individual features in order to explain a classification or segmentation. While this is impressive, the core problem in AI is far from solved. To achieve human-like learning together with symbolic logical reasoning, we need to combine robust learning in deep networks with the reasoning and explainability of symbolic logic. This hybrid approach, a subfield of AI, is called neuro-symbolic (also neural-symbolic or symbolic-subsymbolic) AI.

Reference: Yoshua Bengio, Aaron Courville, and Pascal Vincent. “Representation learning: A review and new perspectives”. IEEE Trans. Pattern Analysis and Machine Intelligence (PAMI), 35(8):1798–1828, 2013

Figure 02: Two approaches to building AI systems: (a) symbolic AI and (b) sub-symbolic (connectionist) AI

What is a symbolic approach? Historically, there have been two different approaches to building AI systems: symbolic and sub-symbolic (connectionist). The symbolic approach builds smart systems by modelling symbols. The term symbol has a venerable history in philosophy, linguistics, and other cognitive sciences. Many early AI advances used a symbolic approach to AI programming, aiming to create smart systems by modelling relationships and using symbols and programs to convey meaning.
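To make "modelling relationships with symbols" concrete, here is a minimal sketch of a symbolic system in Python: knowledge is stored as (subject, relation, object) triples, hand-written rules derive new triples by forward chaining until nothing new appears, and every derived fact records the premises that produced it, which is what makes the reasoning explainable. The identifiers (`cell_7`, `patch_3`, `region_A`) and both rules are hypothetical illustrations, not clinical criteria and not any particular library's API.

```python
from typing import Dict, Iterator, Set, Tuple

# A fact is a (subject, relation, object) triple of plain symbols.
Fact = Tuple[str, str, str]
Derivation = Tuple[Fact, Tuple[Fact, ...]]  # (conclusion, premises)


def transitive_part_of(facts: Set[Fact]) -> Iterator[Derivation]:
    """Hypothetical rule: part_of(A, B) and part_of(B, C) => part_of(A, C)."""
    for (a, r1, b) in facts:
        if r1 != "part_of":
            continue
        for (c, r2, d) in facts:
            if r2 == "part_of" and c == b and a != d:
                yield (a, "part_of", d), ((a, r1, b), (c, r2, d))


def inherit_region_label(facts: Set[Fact]) -> Iterator[Derivation]:
    """Hypothetical rule: part_of(X, R) and labelled(R, L) => lies_in(X, L)."""
    for (x, r1, region) in facts:
        if r1 != "part_of":
            continue
        for (s, r2, label) in facts:
            if r2 == "labelled" and s == region:
                yield (x, "lies_in", label), ((x, r1, region), (s, r2, label))


def forward_chain(facts, rules):
    """Apply every rule repeatedly until no new fact is derived (a fixed point)."""
    known: Set[Fact] = set(facts)
    why: Dict[Fact, Tuple[Fact, ...]] = {}  # derived fact -> premises used
    while True:
        new: Dict[Fact, Tuple[Fact, ...]] = {}
        for rule in rules:
            for conclusion, premises in rule(known):
                if conclusion not in known and conclusion not in new:
                    new[conclusion] = premises
        if not new:
            return known, why
        known.update(new)  # adds the new facts (the dict's keys)
        why.update(new)


facts = {
    ("cell_7", "part_of", "patch_3"),
    ("patch_3", "part_of", "region_A"),
    ("region_A", "labelled", "tumour"),
}
known, why = forward_chain(facts, [transitive_part_of, inherit_region_label])
goal = ("cell_7", "lies_in", "tumour")
print(goal in known)  # True
print(why[goal])
```

Printing `why[goal]` shows the premises behind the conclusion; following that dictionary recursively yields a complete, human-readable proof, the kind of transparency a purely sub-symbolic model does not offer.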

Figure 03: Pathologists manually annotate the region of interest in the given Whole Slide Image (WSI).

Annotating a single WSI requires 30–40 hours of a pathologist's time. Can we support this process with a neuro-symbolic framework?

In clinical practice, on the visual-perception side, deep learning helps discover regions of interest, saving hours of manual inspection of very large histopathology images. On the symbolic side, one can bring in pathologists' deep expert knowledge when designing and operating the system.

Programmed death 1 (PD-1)/programmed death ligand 1 (PD-L1) immunotherapy is one of the most promising cancer treatments; it relies on, and helps, the patient's own immune system to fight cancer, and it offers a personalised, less invasive alternative therapy. However, only a subset of cancer patients respond to immunotherapy. Here, deep learning can be very helpful for clinicians in scoring PD-L1 expression, which serves as an effective biomarker for screening patients for PD-L1-targeted immunotherapy. Because no DL model is 100% accurate, for the cases where it fails to segment or classify a region correctly we turn to the symbolic approach in conjunction with DL: a clinician can reason about the errors (false positives or false negatives) by writing statements in first-order logic and querying whether the trained network satisfies that knowledge. This enables pathologists to trust and accept AI discoveries.
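One way to query a trained network with a first-order statement is to evaluate the statement over the network's per-cell probabilities using fuzzy-logic semantics, in the spirit of frameworks such as Logic Tensor Networks. The sketch below is an illustration under stated assumptions, not the author's implementation: it assumes a hypothetical cell classifier with two output heads (probability of a tumour cell, and of a PD-L1-positive tumour cell), evaluates the rule ∀x: PDL1PositiveTumour(x) → Tumour(x) with the Reichenbach implication and a p-mean-error universal quantifier, and lists the cells that violate it as candidate false positives for a pathologist to review. The class, function names and threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class CellPrediction:
    # Hypothetical outputs of a trained cell classifier for one detected cell.
    cell_id: int
    p_tumour: float           # P(cell is a tumour cell)
    p_pdl1_pos_tumour: float  # P(cell is a PD-L1-positive tumour cell)


def implies(a: float, b: float) -> float:
    """Reichenbach fuzzy implication: I(a, b) = 1 - a + a * b."""
    return 1.0 - a + a * b


def forall(truths: Iterable[float], p: float = 2.0) -> float:
    """Universal quantifier: 1 minus the p-mean of the errors (1 - truth)."""
    values = list(truths)
    if not values:
        return 1.0
    mean_err = sum((1.0 - t) ** p for t in values) / len(values)
    return 1.0 - mean_err ** (1.0 / p)


def query_rule(cells: List[CellPrediction], threshold: float = 0.5) -> Tuple[float, List[int]]:
    """Evaluate: forall x. PDL1PositiveTumour(x) -> Tumour(x).

    Returns the overall satisfaction in [0, 1] and the ids of cells whose
    individual truth value falls below `threshold`.
    """
    per_cell = {c.cell_id: implies(c.p_pdl1_pos_tumour, c.p_tumour) for c in cells}
    satisfaction = forall(per_cell.values())
    violations = sorted(cid for cid, t in per_cell.items() if t < threshold)
    return satisfaction, violations


cells = [
    CellPrediction(1, p_tumour=0.95, p_pdl1_pos_tumour=0.90),
    CellPrediction(2, p_tumour=0.10, p_pdl1_pos_tumour=0.05),
    CellPrediction(3, p_tumour=0.20, p_pdl1_pos_tumour=0.85),  # inconsistent heads
]
satisfaction, violations = query_rule(cells)
print(f"rule satisfaction: {satisfaction:.2f}")
print("cells to review:", violations)  # cells to review: [3]
```

A low overall score says the network's outputs contradict the pathologist's knowledge, and the list of violating cells says where, so the error can be discussed in domain terms rather than by inspecting pixels. Written with a differentiable tensor library, the same expression could also serve as a training signal.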

Figure 04: How accurately can we count cancer cells in a given patch of a Whole Slide Image?

A primary concern in AI is the trustworthiness of intelligent systems built on sub-symbolic AI, i.e., approaches such as deep learning. Symbolic systems offer a human-understandable representation of their internal knowledge and processes. Integrating them into sub-symbolic models to make the resulting system more transparent is therefore the main motivation for neuro-symbolic approaches. However, because the topic is still new, well-established and coherent theories of neuro-symbolic AI have yet to be formulated.

In practice, pathologists use inductive reasoning to translate what the black box has learned into their own domain interpretation, and they want to see how the sub-symbolic discoveries evolve when they introduce domain knowledge into the sub-symbolic system. This acts as a feedback mechanism that injects symbolic knowledge into the black box. It also reduces the amount of data needed to build the model. For example, it is not worth training a model on millions of samples to discover a rule such as x > y when an expert already knows it. Instead of creating more datasets, the expert can encode this knowledge into the network, where it constrains and guides model training.
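A common way to encode such a rule is to add a penalty to the training loss that is zero whenever the rule holds and grows with the size of the violation. The sketch below, in plain Python for readability, assumes a hypothetical model that predicts two quantities `x` and `y` per sample and an expert rule `x > y`; the hinge form, the `margin`, and the weight `lam` are illustrative choices, not a prescribed method. In a real system the same expression would be written with an automatic-differentiation library so that its gradient steers the model's weights.

```python
from typing import Sequence


def mse(preds: Sequence[float], targets: Sequence[float]) -> float:
    """Mean squared error: the ordinary data-fitting term."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)


def rule_penalty(x_preds: Sequence[float], y_preds: Sequence[float], margin: float = 0.0) -> float:
    """Soft encoding of the expert rule x > y.

    Each sample contributes max(0, y - x + margin): nothing when x exceeds y
    by at least `margin`, and a linearly growing cost otherwise.
    """
    return sum(max(0.0, y - x + margin) for x, y in zip(x_preds, y_preds)) / len(x_preds)


def constrained_loss(x_preds, y_preds, x_true, y_true, lam: float = 1.0, margin: float = 0.0) -> float:
    """Data loss plus the weighted knowledge (rule) loss."""
    data_loss = mse(x_preds, x_true) + mse(y_preds, y_true)
    return data_loss + lam * rule_penalty(x_preds, y_preds, margin)


# Two samples: the first respects x > y, the second violates it by 1.0.
# Targets equal the predictions here, so only the rule term is non-zero.
x_preds, y_preds = [3.0, 1.0], [1.0, 2.0]
print(rule_penalty(x_preds, y_preds))  # 0.5
print(constrained_loss(x_preds, y_preds, x_true=[3.0, 1.0], y_true=[1.0, 2.0], lam=2.0))  # 1.0
```

Because the penalty only switches on when predictions break the rule, the expert's knowledge acts as an extra supervision signal that the model would otherwise have to infer from many more examples.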

We can build neuro-symbolic hybrid systems either through integration, where the symbolic (logic-based) and sub-symbolic (neural network) approaches are blended into a unified model, or through combination, where the two approaches remain separate blocks that are jointly exploited to produce meaningful, explainable intelligent systems.
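The combination style can be sketched as two separate blocks with a narrow interface: a neural block emits class probabilities per cell, and a symbolic block thresholds them into symbols and applies explicit rules, returning either an accepted label or a flag with a human-readable reason. Everything below is hypothetical: the hard-coded detections stand in for a trained network's output, and the 0.5 threshold and the two rules are illustrative, not validated clinical logic.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Set


@dataclass
class Detection:
    cell_id: int
    probs: Dict[str, float]  # neural block output: class name -> probability


# A rule inspects one cell's symbols and returns a reason string if the cell
# should be flagged, or None if the rule is satisfied.
Rule = Callable[[Set[str]], Optional[str]]


def to_symbols(det: Detection, threshold: float = 0.5) -> Set[str]:
    """Interface between the blocks: probabilities -> discrete symbols."""
    return {label for label, p in det.probs.items() if p >= threshold}


def exclusive_labels(symbols: Set[str]) -> Optional[str]:
    # Hypothetical rule: a cell cannot be both a tumour cell and a lymphocyte.
    if {"tumour", "lymphocyte"} <= symbols:
        return "labelled both tumour and lymphocyte"
    return None


def needs_a_label(symbols: Set[str]) -> Optional[str]:
    # Hypothetical rule: every detected cell must receive some class.
    return None if symbols else "no class above threshold"


def symbolic_block(dets: List[Detection], rules: List[Rule]):
    """Accept cells that satisfy every rule; flag the rest with reasons."""
    accepted: Dict[int, Set[str]] = {}
    flagged: Dict[int, List[str]] = {}
    for det in dets:
        symbols = to_symbols(det)
        reasons = [r for r in (rule(symbols) for rule in rules) if r is not None]
        if reasons:
            flagged[det.cell_id] = reasons
        else:
            accepted[det.cell_id] = symbols
    return accepted, flagged


detections = [
    Detection(1, {"tumour": 0.9, "lymphocyte": 0.1}),
    Detection(2, {"tumour": 0.7, "lymphocyte": 0.6}),
    Detection(3, {"tumour": 0.2, "lymphocyte": 0.3}),
]
accepted, flagged = symbolic_block(detections, [exclusive_labels, needs_a_label])
print(accepted)  # {1: {'tumour'}}
print(flagged)
```

Because the blocks share only the symbol interface, either one can be retrained or rewritten independently, and every flagged cell carries the rule that fired, which is the explanation a pathologist reviews. In the integration style, by contrast, the rules would be folded into the network's training objective rather than applied afterwards.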

Where AI directly affects human life, for example in tracking the growth of cancer cells and their interaction with non-cancerous cells, explainability is not merely a desirable property: in some cases it is, or will soon be, a legal requirement.

“Success in creating effective AI could be the biggest event in the history of our civilization. Or the worst. We just don’t know. So we cannot know if we will be infinitely helped by AI, or ignored by it and side-lined, or conceivably destroyed by it,” Stephen Hawking said in a speech.

We intend to build systems that can support pathologists/clinicians but not replace them completely!